![[logo]](/images/SimplyGenius.png)

Simply Genius .NET

Software that makes you smile

Petri Dish: Multi-threading for performance in C#

|

|

Publicly recommend on Google

|

Table of Contents

Introduction

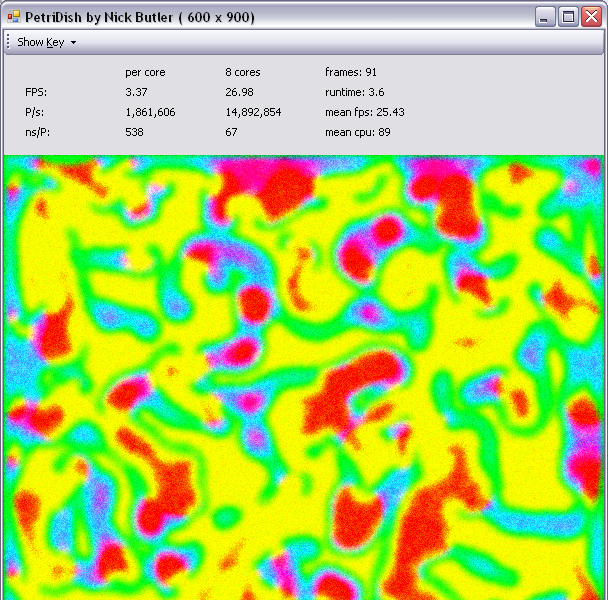

"PetriDish" is an example of concurrent programming for performance. It is a simulation, with three categories of organisms, all growing, mating, and eating each other. It is based on absolutely no ecological theory. I just made it up. It does, however, serve as a useful experiment.

First, I will quickly describe the model, and then I will delve into the multi-threading.

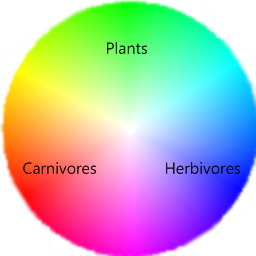

1 Model

As you can see from the key, the three groups are: plants, herbivores, and carnivores. The core equations represent the equilibrium between these groups. If you run the app, you will see green areas as plants grow. These turn blue as herbivores thrive. Then, red areas appear as the herbivores are eaten by a growing carnivorous population. The yellow areas are an unstable equilibrium with few herbivores. The carnivores die off, plants regrow and the cycle starts over.

Each pixel, or cell, has three byte values representing the population of each of the three categories. The frames are generated iteratively. That is to say, each frame depends only on its predecessor, plus some random instability.

1.1 Core Equations

The core equations are quite simple. For each pixel, they take the values: gn, bn, and rn which represent the existing plant, herbivore, and carnivore populations, respectively. The results: gn+1, bn+1, and rn+1 are the populations for the next frame.

gn+1 = gn * 1.1 - bn * 0.1 - rn * 0.01 - Randomg bn+1 = bn * 1.1 + gn * 0.1 - rn * 0.09 - Randomb rn+1 = rn * 1.1 + bn * 0.1 + gn * 0.00 - Randomr

The first term in each equation increases the existing population by 10%. This is supposed to represent reproduction.

The last term in each equation represents deaths. It is a random value to introduce some instability into the model. For the plants, this value is between -42 and 42. For both the herbivores and carnivores, it is between 0 and 42.

For the plants, the middle two terms represent being eaten by herbivores ( 10% ) and carnivores ( 1% ).

For the herbivores, the two terms represent eating plants ( 10% ) and being eaten by carnivores ( 9% ).

The carnivores eat herbivores ( 10% ), but aren't effected by the plant population.

The last thing to say about these equations is that they are only loosely based on the real world. My motivation was to generate pleasing patterns, so I tweaked them until I was happy with the results. Don't base your ecology PhD on them!

1.2 Existing Population

The existing populations used by the equations depend on the populations in the surrounding pixels. In the download, a 5 x 5 grid is used. The influence of a cell is weighted by its distance from the centre cell, although you can turn this off and just use the mean average over the grid. Removing this calculation doesn't overly effect the resulting images, and increases performance by about a third.

The viewport is surrounded by a meadow where there are no carnivores or herbivores, but a population of 75% of plants. Well, that's how the edge cases are handled, anyway.

1.3 Oases

There are 25 small oases, placed as a 5 x 5 grid in the viewport. The plant population inside each oasis never falls below 42.

1.4 Instability

The model proved to be too stable and predictable, so some random instability was introduced. This was done by delaying the iteration of some parts of the viewport by a random amount between 5% and 15%.

The viewport is split up into 100 x 100 pixel squares. Three quarters of these squares are randomly chosen to be delayed. The delay is achieved by just not calculating the next iteration if a random value is below the threshold.

1.5 Summary

The resulting images exhibit enough instability to be interesting while retaining some cohesion. There is an initial period of about 1000 frames that is quite predictable, but then, each run settles into a pleasing random cycle.

2 Multi-threading

Initially, the thread control was implemented using Microsoft's Parallel Extensions Library. This made life a little easier, but unfortunately, performance was terrible. I must note that I am using the first CTP, released in December 2007, so the library has not yet been optimised. However, I think the real problem was the size of the work units. The program only takes in the order of 10-6 seconds, or roughly 1000 clock cycles, per pixel per core. This is quite a small unit of work, and it is swamped by the overhead of the library. So in the end, I handled the threads manually, with much better results.

This article is about multi-threading for performance, so we need some metrics. The base metric I used was the time taken to calculate one pixel using a single thread. This eliminates all the overheads introduced by multi-threading, so it gives the minimum time required by my machine to execute the calculations. Without using weighting, the figure is 310 nanoseconds per pixel per core. With weighting, the figure is 460 ns / pixel / core. I will use both options in the rest of this article, to illustrate different points.

2.1 User Interface

The UI is quite simple. When a frame is produced, it updates its metrics and invalidates itself. It overrides the OnPaint method to draw directly on the screen using the Graphics object.

The UI lets the Engine know that a frame has been painted, by calling Engine.Painted(). Even if the Engine has calculated the next frame, it will not continue until the previous frame has been painted. In practice, this rarely causes a wait, as the UI is much faster than the Engine.

2.2 Engine

The engine code is all in a static class called Engine:

partial static class Engine { public static event EventHandler Update; public static Bitmap Bitmap { get { ... } } public static void Painted() { ... } }

The UI primarily interacts with this class through these three members: the Update event, the Bitmap property, and the Painted method. When a frame is ready in the Bitmap, the Engine fires the Update event. In response, the UI invalidates itself. When the screen has been updated in the OnPaint override, the UI calls Painted to let the Engine know it can continue.

2.3 Buffers

Two Int32 arrays are used as buffers, and they are toggled on each frame. So, for one frame, BufferA is the source and BufferB is updated. For the next frame, the buffers are switched. This saves copying the results of one frame to the source buffer of the next frame.

This architecture has another advantage: there is no shared mutable state. This is very important for performance. Although all the threads read from the same input buffer, each thread is responsible for writing only its own portion of the output buffer. This is important because it means that no synchronization is required between the threads while the next frame is being calculated.

The output buffer is then copied to the Bitmap and the Update event is fired. This code is protected by a lock, which is also used in the UI to prevent concurrent access to the Bitmap. Since the UI is updated after the Bitmap is written, there isn't much contention for this lock. It is mainly there for correctness.

I put this work in a separate thread, because it is sequential and got in the way of the concurrent calculations.

2.4 Amdahl's law

Gene Amdahl published a paper in 1967: "Validity of the single processor approach to achieving large scale computing capabilities". He realised that every program has a sequential part and a concurrent part. His law states that the execution time of a program is limited by the time spent executing sequentially. In other words, even if you have an infinite degree of parallelism, your program will still be limited by the time it takes to execute the sequential part.

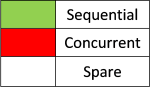

Here is the key we will use:

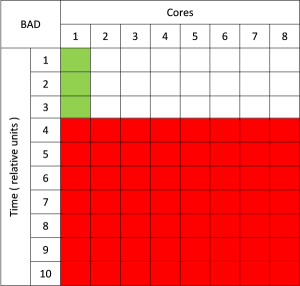

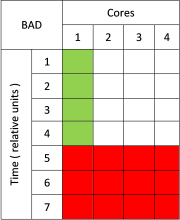

Consider a machine with eight cores. In 10 units of time, it is capable of executing 80 units of work. But if 3 of those units of work must be executed sequentially, we lose 3 x 7 = 21 units of work, which is about 25% of the available power:

I will now derive an equation that describes this law. Consider the simple example below. There are 16 units of work and 4 cores. 4 units of work are sequential, so the ratio of sequential : total work is 4 / 16 = 25%. The clock time is 7 time units, so the capacity is 7 x 4 = 28. So, the ratio of actual work to capacity is 16 / 28 = 4 / 7. Multiply this ratio by the number of cores, and we get 16 / 7 = 2.286. This is the number of cores that are actually being used.

So, given:

N = number of cores S = ratio sequential : total C = ratio concurrent : total = ( 1 - S ) W = total work

The actual work is given by N x W. The amount of work done sequentially is S x W, and concurrently is C x W. The capacity is, therefore, N x S x W + C x W. We want the ratio of actual work to capacity, so cancel W, and you get this equation:

![]()

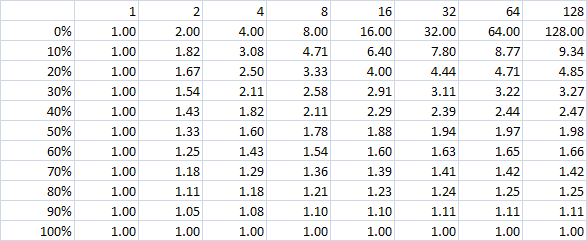

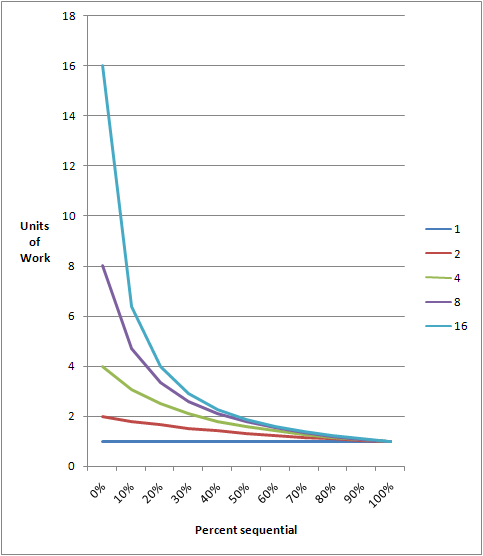

Here is a table and graph of this equation for 1 to many cores:

As you can see, it doesn't matter as much on a two core machine. You can only lose one core at worst, which is 50%. However, on a 16 core machine, you will lose over 50% of the capacity, or 9 cores, by having just 10% of your work executing sequentially. The more cores that are available, the worse the effect of any sequential component.

My point is that any substantial sequential execution will waste the capacity you are trying to put to good use. This effect only gets worse with increasing numbers of cores.

2.5 Buffer Thread

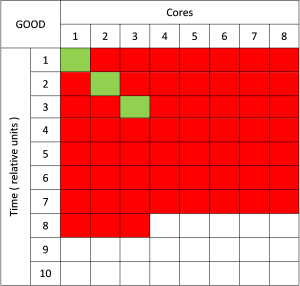

The concurrent part of this code is the calculation of the output buffer. It runs the same code across as many cores as possible. The sequential part is the copying of the output buffer to a bitmap. These two actions can actually run at the same time because they are both reading from one buffer. The read buffer is immutable while the output buffer is being written.

So, I spun off a thread to just handle the bitmap. It doesn't have much work to do and doesn't take up much capacity, but it will virtually eliminate the sequential part of the main loop. In fact, it will almost always finish its work before the next frame is ready.

As you can see, the clock time per frame has reduced by 25%, which means a 25% increase in frame rate for very little effort.

2.6 Synchronization

The question now is when does the buffer thread start copying the next frame? The answer is that the master thread tells it to, using an AutoResetEvent. Time for some code:

// Events partial class Engine { static AutoResetEvent _ToggleEvent = new AutoResetEvent( true ); static AutoResetEvent _UIEvent = new AutoResetEvent( true ); static AutoResetEvent _BufferEvent = new AutoResetEvent( false ); } // The master thread static void Master() { for ( ; ; ) { Work(); _UIEvent.WaitOne(); _ToggleEvent.WaitOne(); _BufferToggle = !_BufferToggle; _BufferEvent.Set(); } } // The buffer thread static void BufferMaster() { for ( ; ; ) { try { _BufferEvent.WaitOne(); UpdateUI(); } finally { _ToggleEvent.Set(); } } }

When the threads start, the buffer thread immediately waits for the _BufferEvent. The master thread goes ahead and calculates the first frame. When it is done, it goes through the waits for the _UIEvent and _ToggleEvent because they are created in the signaled state. It then toggles the buffers, and signals the _BufferEvent to release the buffer thread. Both threads then proceed. The master thread starts calculating the next frame while the buffer thread copies the last frame to the screen.

Usually, the buffer thread and the UI complete their work before the master thread has finished the next frame. If this is the case, the master thread does not have to wait before toggling the buffers again. However, if, for some reason, the UI hasn't been updated, the master thread will not continue until this work is finished. This ensures that every frame is shown in the UI, which eliminates flicker.

This architecture is correct, while minimising waits in the master thread. Synchronizations do take a small amount of time, even when the event is already signaled. However, there are only three events used per frame. Each frame takes roughly 30 ms, which is an age to modern processors. What you want to avoid is synchronization in tight loops, where the overhead will be noticed.

2.7 Work Threads

The Work method below is called once per frame by the master thread we just saw:

// Work thread ( naive ) unsafe static void Work() { _ThreadCounter = ThreadCount; _ThreadSemaphore.Release( ThreadCount ); _ThreadEvent.WaitOne(); }

The ManualWork method is used by all the worker threads to calculate the next frame:

// Worker thread ( naive ) unsafe static void ManualWork( object o ) { int thread = ( int ) o; TLS tls = _ThreadLocalState.Current; for ( ; ; ) { _ThreadSemaphore.WaitOne(); try { fixed ( Int32* read = &ReadBuffer[ 0 ] ) fixed ( Int32* write = &WriteBuffer[ 0 ] ) { int start = _ThreadStarts[ thread ]; int end = _ThreadEnds[ thread ]; for ( int i = start ; i < end ; i += 1 ) Next( i, tls, read, write ); } } finally { if ( Interlocked.Decrement( ref _ThreadCounter ) == 0 ) _ThreadEvent.Set(); } } }

This method uses unsafe pointers to optimise memory access, but I will refrain from detailing the optimizations I made to increase performance. If you are multi-threading for performance, you often want to optimize your code as well. The two tasks normally go hand in hand. But, since this is an article about concurrency, I won't say any more about optimization.

The worker threads are released using a Semaphore. This is a synchronization primitive that allows control of many objects. In this case, it controls all the worker threads, of which there is one per core.

Each thread does its allotted work, and then decrements a shared counter. When this counter reaches zero, all the threads have completed and the Work method is signaled to allow it to return to the master thread.

2.8 Load Balancing

The work in the method above is distributed evenly over all the threads. So, each thread is responsible for calculating the same number of pixels. While this is simple, it is not good enough for a real program. There is no guarantee that every thread will be given the same amount of processor time by the kernel scheduler. In reality, on a physical multi-core machine, this is actually very unlikely. In this implementation, all the threads must wait for the slowest one to complete.

I timed the fastest and slowest threads, and the results were surprising. The fastest thread was taking about 450 ns per pixel, and the slowest about 620. That is nearly 40% difference! It's real world results like this that you can only discover by using a physical many-core machine.

The solution was to implement a load balancing enumerator.

By the way, the TLS class is from another of my articles: Thread Local Storage. Basically, it provides storage that is local to a thread, but that is also available from other threads. This allows them to keep track of the overall progress, among other things.

// Work thread ( balanced ) unsafe static void Work() { _BalancingMaster.Reset(); _ThreadCounter = ThreadCount; _ThreadSemaphore.Release( ThreadCount ); _ThreadEvent.WaitOne(); } // Worker thread ( balanced ) unsafe static void ManualWork( object o ) { TLS tls = _ThreadLocalState.Current; for ( ; ; ) { _ThreadSemaphore.WaitOne(); tls.Enum.Reset(); try { fixed ( Int32* read = &ReadBuffer[ 0 ] ) fixed ( Int32* write = &WriteBuffer[ 0 ] ) { foreach ( int i in tls.Enum ) Next( i, tls, read, write ); } } finally { if ( Interlocked.Decrement( ref _ThreadCounter ) == 0 ) _ThreadEvent.Set(); } } }

The balancing master is a thread-safe object that gives out chunks of work to the enumerators. There is one enumerator per thread, so these don't have to be synchronized. Each one gets a chunk of work, then yields those values, one at a time, to the ManualWork method. When a chunk is finished, the enumerator gets another one from the balancing master. Here is the implementation:

// BalancingMaster class BalancingMaster { public static readonly int MAX = X * Y; volatile int _Current = 0; object _Key = new object(); public void Reset() { _Current = 0; } public int Next { get { lock ( _Key ) { if ( _Current >= MAX ) return -1; int current = _Current; _Current += BalancingChunk; return current; } } } } // BalancingEnumerator partial class BalancingEnumerator : IEnumerator<int> { BalancingMaster _Master; int _Base = 0; int _Index = BalancingChunk; bool _Finished = false; public void Reset() { _Base = 0; _Index = BalancingChunk; _Finished = false; } public int Current { get { return _Base + _Index; } } public bool MoveNext() { if ( _Finished ) return false; _Index++; if ( _Base + _Index < BalancingMaster.MAX ) { if ( _Index < BalancingChunk ) return true; _Index = 0; _Base = _Master.Next; if ( _Base >= 0 ) return true; } _Finished = true; return false; } }

As you can see, the BalancingMaster uses a lock in its Next method. However, with a chunk size of 1000, the contention for this lock is almost negligible with eight threads. The advantages of using the whole capacity of the machine far outweigh the overhead.

Using this load balancing resulted in an average speed of 520 ns per pixel per core, which is about the expected value: between 450 and 620. However, the frame rate increased by about 30% because more of the capacity of the machine was being used on each frame. The processor usage jumped from about 70% to about 90%, which is acceptable.

Conclusion

Well, that's about it. I learnt quite a bit exploring this program. The most important thing I learnt is that many-core machines behave very differently from dual core machines. I recommend getting one to play with; it's a new frontier!

I hope you have enjoyed reading this article, thanks very much.

History

- 2008 - 05 - 28: First version.

License

This article, along with any associated source code and files, is licensed under The Code Project Open License.

Contact

If you have any feedback, please feel free to contact me.